Written by Dr Damien Clarke

Head of Data Science

The Role of Radar in Modern Sensing and the Benefits of Sensor Fusion with Cameras and Lidar

In today's rapidly evolving technological landscape, radar has become a key component of many modern sensing systems. It is valued for its robust performance in challenging environments, including fog, rain, snow and darkness.

However, relying on a single sensor modality has limitations and may not provide a complete solution. By integrating radar with cameras and/or lidar, it is possible to build a combined system that is greater than the sum of its parts. Sensor fusion provides greater environmental awareness and a more comprehensive picture of the surroundings, using the strengths of each sensor to compensate for the weaknesses of the others. The result is enhanced performance across a range of applications that demand high levels of robustness, reliability and safety.

The Fundamentals of Radar Technology

Radar operates by transmitting radio waves and analysing the echoes received after these waves reflect from distant objects. By measuring the time delay and frequency shift of the reflected signals, radar can determine both the object’s distance and relative speed. This leads to several advantages:

- All-weather capability: Because it uses radio frequency (RF) signals, radar works in fog, rain, snow and darkness.

- Resistant to occlusions: RF signals can penetrate some optically opaque surfaces, dirt on the antenna and sometimes even foliage.

- Long-range detection: Depending on the transmit power, radar can detect objects hundreds of metres away, or even further.

- Speed estimation: Through the Doppler effect, radar can accurately measure an object's radial velocity in a single measurement.

- Motion detection: Moving objects can be detected against static background clutter with minimal processing.

- Target classification: Micro-motion of a non-rigid body (e.g. wheels, rotors, arms, legs and other vibrating or rotating parts) produces a target-specific micro-Doppler spectrum.

- Coherent processing: The ability to measure phase as well as magnitude allows small changes in the scene to be detected.
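As a rough illustration of the relationships behind these advantages, the sketch below converts a round-trip delay into range (R = c·τ/2), a Doppler shift into radial velocity (v = f_d·λ/2) and a phase change into radial displacement (ΔR = λ·Δφ/4π). It assumes an idealised single point target; the function names and the 77 GHz carrier are illustrative choices, not part of any particular radar's interface.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def range_from_delay(delay_s: float) -> float:
    """Target range from the round-trip time delay: R = c * tau / 2."""
    return C * delay_s / 2.0


def radial_velocity_from_doppler(doppler_hz: float, carrier_hz: float) -> float:
    """Radial velocity from the Doppler shift: v = f_d * lambda / 2.

    Sign convention (assumed here): positive means the target is approaching.
    """
    wavelength = C / carrier_hz
    return doppler_hz * wavelength / 2.0


def displacement_from_phase(delta_phase_rad: float, carrier_hz: float) -> float:
    """Radial displacement from a change in echo phase: dR = lambda * dphi / (4 * pi).

    The factor of 4*pi (not 2*pi) arises because the path change is two-way.
    """
    wavelength = C / carrier_hz
    return wavelength * delta_phase_rad / (4.0 * math.pi)


if __name__ == "__main__":
    fc = 77e9  # example automotive mmWave carrier frequency
    print(f"range: {range_from_delay(1e-6):.1f} m")  # 1 microsecond round trip
    print(f"velocity: {radial_velocity_from_doppler(5133.0, fc):.2f} m/s")
    print(f"displacement: {displacement_from_phase(math.pi / 2, fc) * 1e3:.3f} mm")
```

At 77 GHz the wavelength is about 3.9 mm, so a quarter-cycle phase change corresponds to a displacement of roughly half a millimetre, which is why coherent processing can pick up very small changes in the scene.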

For these reasons, radar is widely used in applications well beyond military systems, such as collision avoidance, automotive sensing, space missions, aircraft radar altimeters, maritime surveillance and perimeter security.

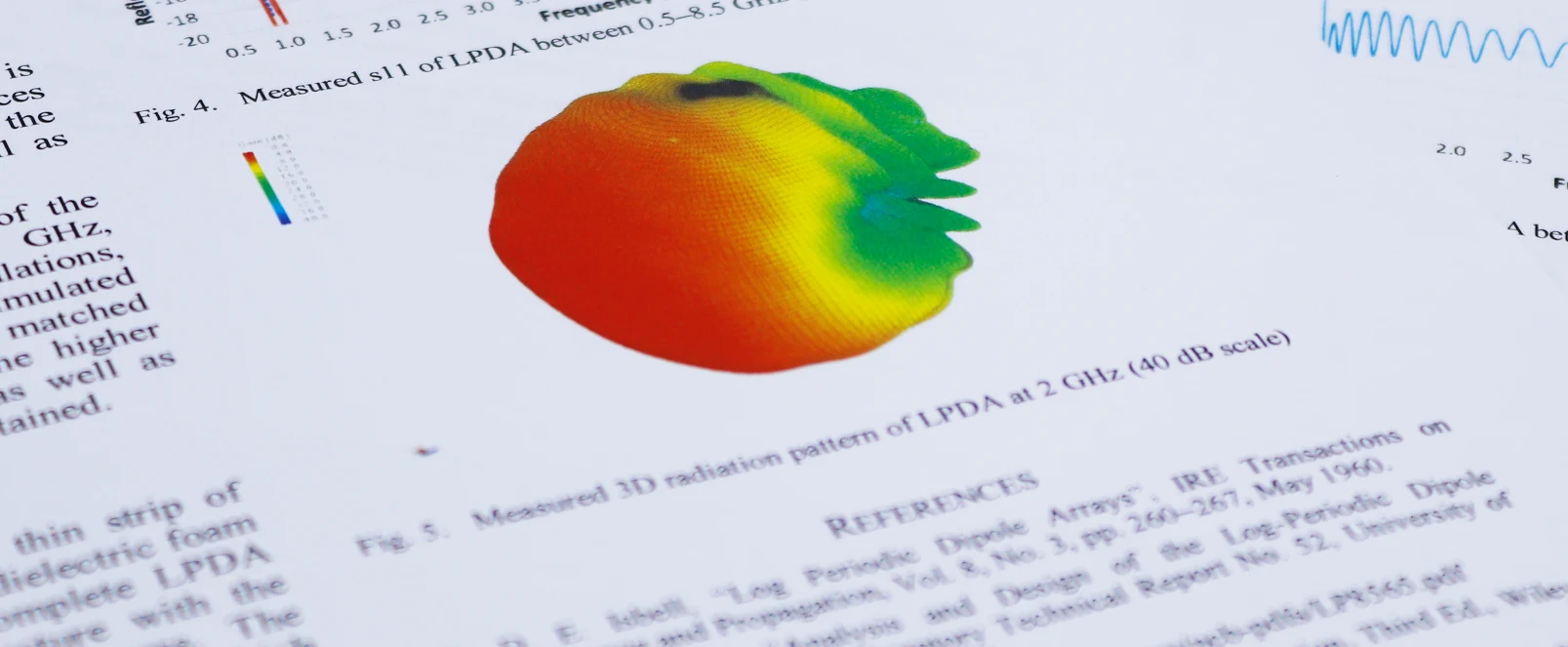

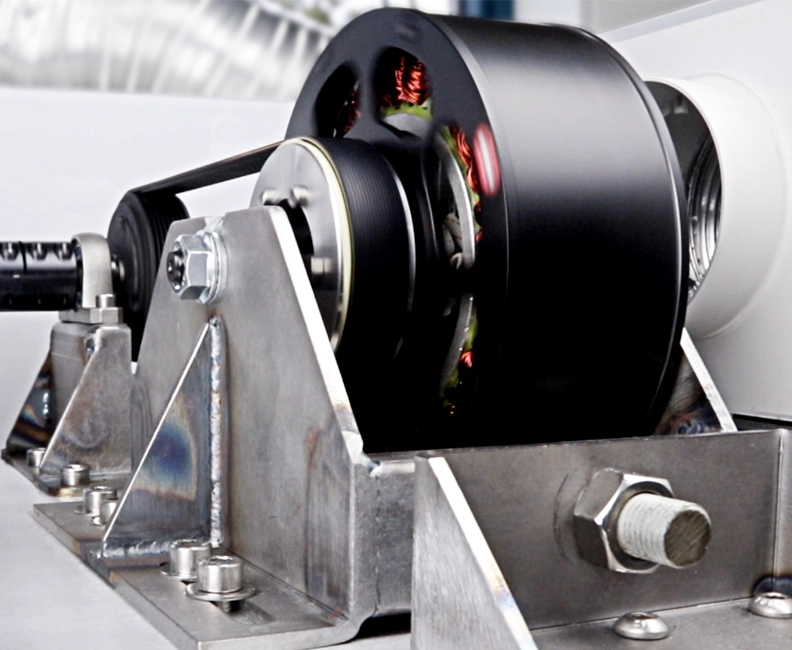

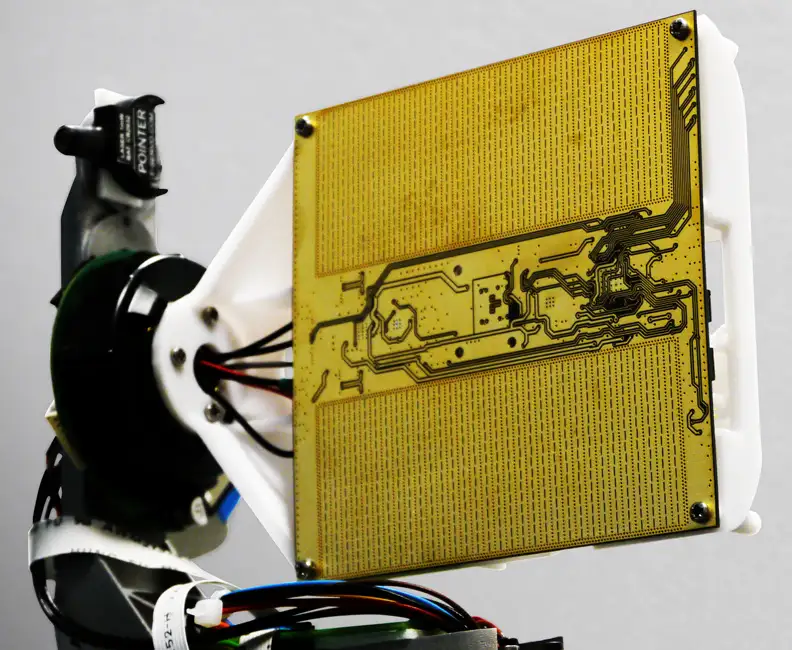

MIMO Radars

A particular type of radar often developed by Plextek is the Multiple-Input Multiple-Output (MIMO) radar. MIMO radars use multiple transmitters and multiple receivers in an antenna array, so that signals are transmitted and received from slightly different locations. By comparing the reflected signals across the array, it is then possible to estimate the azimuth and/or elevation angle of an object, in addition to its range and relative velocity. With a sufficiently large antenna array it is even possible to produce an imaging radar. Because mmWave radars operate at short wavelengths, they can achieve angular resolutions of a few degrees in a package not much bigger than a smartphone.
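A minimal sketch of how a MIMO virtual array turns phase differences into an angle estimate is shown below. It assumes an ideal, noise-free uniform linear virtual array of N_tx × N_rx elements at half-wavelength spacing and a single target, and uses a simple delay-and-sum (Bartlett) beam scan; practical radars more commonly use an FFT or higher-resolution estimators. The function names are hypothetical, and the resolution figure is only the broadside rule of thumb of roughly 2/N radians.

```python
import cmath
import math


def virtual_array_snapshot(n_tx: int, n_rx: int, angle_deg: float) -> list[complex]:
    """Ideal complex samples across a uniform linear MIMO virtual array.

    Assumes half-wavelength virtual element spacing, so the phase step between
    adjacent virtual elements is pi * sin(theta).
    """
    n_virtual = n_tx * n_rx
    step = math.pi * math.sin(math.radians(angle_deg))
    return [cmath.exp(1j * step * n) for n in range(n_virtual)]


def estimate_angle(snapshot: list[complex], resolution_deg: float = 0.1) -> float:
    """Delay-and-sum (Bartlett) beam scan: return the angle with maximum power."""
    best_angle, best_power = 0.0, -1.0
    n_steps = int(round(180.0 / resolution_deg))
    for i in range(n_steps + 1):
        candidate = -90.0 + i * resolution_deg
        step = math.pi * math.sin(math.radians(candidate))
        # Steer the array towards the candidate angle and sum coherently.
        beam = sum(x * cmath.exp(-1j * step * n) for n, x in enumerate(snapshot))
        power = abs(beam) ** 2
        if power > best_power:
            best_angle, best_power = candidate, power
    return best_angle


def approx_resolution_deg(n_tx: int, n_rx: int) -> float:
    """Rule-of-thumb broadside resolution for half-wavelength spacing: ~2/N radians."""
    return math.degrees(2.0 / (n_tx * n_rx))


if __name__ == "__main__":
    snap = virtual_array_snapshot(n_tx=3, n_rx=4, angle_deg=20.0)
    print(f"estimated angle: {estimate_angle(snap):.1f} deg")
    print(f"12 virtual channels: ~{approx_resolution_deg(3, 4):.1f} deg")
    print(f"192 virtual channels: ~{approx_resolution_deg(12, 16):.1f} deg")
```

With 3 transmitters and 4 receivers the 12-element virtual array gives roughly 9.5° at broadside, whereas 12 × 16 = 192 virtual channels brings this down to around 0.6°, which illustrates why larger arrays are needed for imaging radar.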

Limitations of Radar

While radar has several advantages, it also has some limitations:

- Low spatial resolution: Radar typically lacks the angular (cross-range) resolution needed to distinguish between closely spaced objects or resolve fine detail.

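- Difficulty in classification: While micro-Doppler signatures are sufficient for classification in many applications, they are not always enough; a static object with no moving parts, for example, produces no micro-Doppler signature at all.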

- Multipath and clutter issues: Environments with many reflective surfaces, such as urban areas, can produce false detections.

- Limited vertical discrimination: Radars that can discriminate in range, azimuth and elevation are available, but many radars are designed primarily for range and azimuth, with reduced or no ability to discriminate in elevation.

These limitations illustrate why fusing radar data with data from other sensors can be highly beneficial.

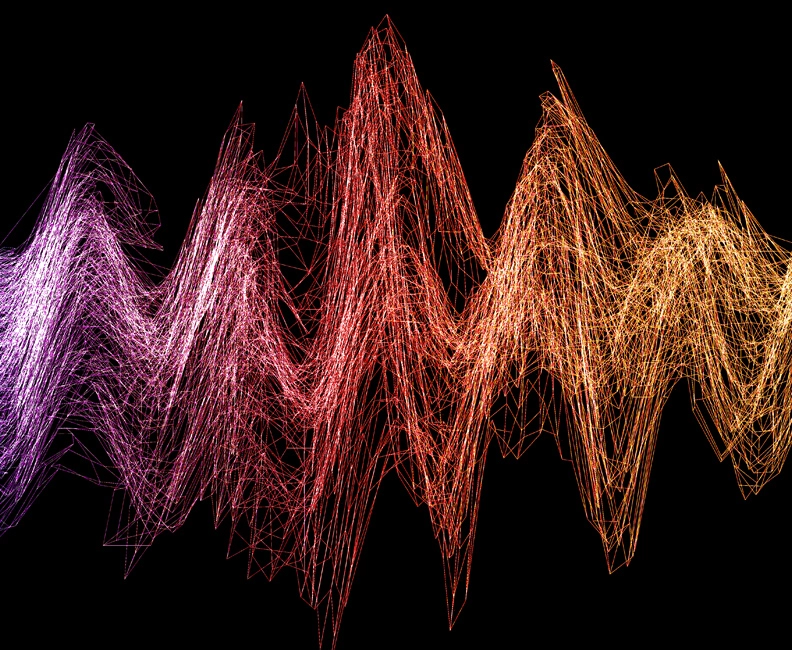

Introduction to Sensor Fusion

Sensor fusion is the process of combining data from multiple sensors to produce a better understanding of the surrounding environment than is possible with any single sensor on its own. This is often achieved by using sensors with complementary strengths so that each can cover for the others' weaknesses. For example, fusing a colour image from a standard camera with a greyscale image from a thermal camera would aim to retain colour when it is available and rely on the thermal camera in darkness. By providing more accurate information and supporting better decision-making, this integration of sensors can improve performance in a range of applications, including robotics and autonomous vehicles, or even satellites and lunar vehicles.
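The colour/thermal example can be sketched as a simple pixel-level blend in which the weight given to the colour camera ramps down as the scene gets darker. This assumes the two images are already co-registered on the same pixel grid; the brightness thresholds and function names are hypothetical, and real systems typically use more sophisticated fusion methods.

```python
def daylight_weight(mean_luminance: float, dark: float = 20.0, bright: float = 80.0) -> float:
    """Weight given to the colour camera: 0 in darkness, 1 in good light.

    mean_luminance is the average 8-bit brightness of the colour frame. The
    dark/bright thresholds are illustrative assumptions, not tuned values.
    """
    if mean_luminance <= dark:
        return 0.0
    if mean_luminance >= bright:
        return 1.0
    return (mean_luminance - dark) / (bright - dark)


def fuse_pixel(
    rgb: tuple[float, float, float], thermal: float, weight: float
) -> tuple[float, float, float]:
    """Blend a colour pixel with a co-registered thermal intensity (both 0-255).

    weight = 1 keeps the original colour; weight = 0 gives a grey thermal pixel.
    """
    r, g, b = (weight * c + (1.0 - weight) * thermal for c in rgb)
    return r, g, b


if __name__ == "__main__":
    pixel_rgb, pixel_thermal = (200.0, 100.0, 50.0), 180.0  # hypothetical values
    for label, luma in [("night", 10.0), ("dusk", 50.0), ("day", 120.0)]:
        w = daylight_weight(luma)
        print(label, w, fuse_pixel(pixel_rgb, pixel_thermal, w))
```

A global, per-frame weight is the simplest choice; a per-region weight would let well-lit parts of a night scene keep their colour.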

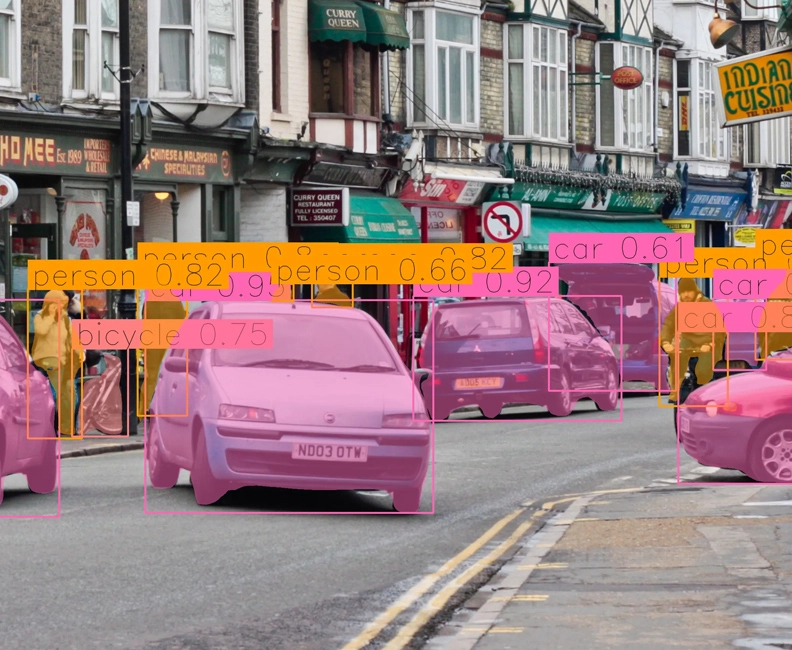

Radar and Camera Fusion

A common fusion example is the combination of a radar with a camera. Such a system can take advantage of the radar's good range and velocity resolution together with the camera's fine lateral resolution. The result is a fused sensor with good 3D spatial resolution and high-performance motion detection, allowing high-quality classification and 3D localisation of objects. The camera also provides colour information in well-lit conditions, while in low light the radar continues to operate as before. This is perhaps best illustrated by Advanced Driver Assistance Systems (ADAS) and autonomous vehicles, where cameras can read road signs and interpret traffic lights while radars robustly detect objects that pose a collision risk. In combination, they produce a sensor that can be greater than the sum of its parts.
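The complementary geometry can be illustrated by placing a detection in 3D using the camera's bearing (from the pixel position) and the radar's range. This sketch assumes an ideal pinhole camera with known intrinsics (fx, fy, cx, cy), no lens distortion, and a radar that is co-located and aligned with the camera after calibration; the function name and camera parameters are hypothetical.

```python
import math


def fuse_radar_range_with_pixel(
    u: float, v: float, range_m: float,
    fx: float, fy: float, cx: float, cy: float,
) -> tuple[float, float, float]:
    """Place a detection in 3D from a camera pixel and a radar range.

    Assumes an ideal pinhole camera and a radar co-located and aligned with it.
    Axes follow the usual camera convention: x right, y down, z forward.
    """
    # Back-project the pixel to a ray direction in camera coordinates.
    dx, dy, dz = (u - cx) / fx, (v - cy) / fy, 1.0
    norm = math.sqrt(dx * dx + dy * dy + dz * dz)
    # Scale the unit ray so that its length equals the radar-measured range.
    scale = range_m / norm
    return dx * scale, dy * scale, dz * scale


if __name__ == "__main__":
    # Hypothetical 1280x720 camera with a 1000-pixel focal length.
    point = fuse_radar_range_with_pixel(
        u=840.0, v=360.0, range_m=30.0, fx=1000.0, fy=1000.0, cx=640.0, cy=360.0
    )
    print("x, y, z =", tuple(round(c, 2) for c in point))
```

In practice the harder step is deciding which radar detection belongs to which image object (data association); this sketch assumes that pairing has already been made.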

Radar and Lidar Fusion

Lidar is similar to radar in that it enables the measurement of range. However, like a camera, lidar operates at a much shorter wavelength than radar, which means it can achieve much finer spatial resolution for a similarly sized aperture. As a result, lidar is generally better than radar at recognising 3D shapes. In contrast, radar does not suffer from problems with low-reflectivity (e.g. black) materials or bright ambient light (e.g. sunlight), and it is more robust in the presence of dust and other adverse environmental effects. Radar therefore typically offers more robust performance in varied or unpredictable environmental conditions and can generally operate at longer ranges. As with radar/camera fusion, radar/lidar fusion can produce a combined sensor that is superior to either sensor alone.
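The wavelength argument can be made concrete with the approximate diffraction limit θ ≈ 1.22λ/D for a circular aperture of diameter D. The 10 cm aperture, 77 GHz radar carrier and 905 nm lidar wavelength below are illustrative assumptions; real lidar resolution is also set by beam divergence and scan pattern, so this only shows the scale of the difference.

```python
import math

C = 299_792_458.0  # speed of light, m/s


def diffraction_limit_deg(wavelength_m: float, aperture_m: float) -> float:
    """Approximate diffraction-limited angular resolution: theta ~ 1.22 * lambda / D."""
    return math.degrees(1.22 * wavelength_m / aperture_m)


if __name__ == "__main__":
    aperture = 0.10              # 10 cm aperture for both sensors (assumed)
    radar_wavelength = C / 77e9  # about 3.9 mm at 77 GHz
    lidar_wavelength = 905e-9    # a common near-infrared lidar wavelength
    print(f"radar: {diffraction_limit_deg(radar_wavelength, aperture):.2f} deg")
    print(f"lidar: {diffraction_limit_deg(lidar_wavelength, aperture):.5f} deg")
```

For the same 10 cm aperture this gives roughly 2.7° for the radar against roughly 0.0006° for the lidar, a ratio equal to the ratio of their wavelengths.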

Tri-Sensor Fusion: Radar, Camera, and Lidar

The natural extension is to combine all three sensors (radar, camera and lidar) into a single system with the best possible performance. This is often seen in autonomous vehicles, where all three sensors are combined to benefit from their individual strengths:

- Radar: Velocity and distance information

- Camera: Object recognition

- Lidar: 3D information

This approach accepts that every sensor has its limitations and that relying on a single sensor in complex environments can produce an unacceptable level of risk. Using multiple sensors reduces the likelihood that a single failure mode affects all of them simultaneously and causes an undesirable outcome. Integrating multiple dissimilar, redundant sensors into a single sensing system can therefore significantly improve robustness in critical applications. This is especially true for one-off events where failure can have catastrophic consequences, such as lunar lander missions.
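The redundancy argument can be quantified with a simple probability model. If sensor failures were fully independent, the chance of all of them failing together would be the product of their individual failure probabilities; any shared (common-mode) failure quickly dominates that figure, which is why dissimilar sensors matter. The probabilities below are hypothetical and chosen only to show the arithmetic.

```python
import math


def prob_all_fail(failure_probs: list[float]) -> float:
    """Probability that every sensor fails together, assuming independent failures."""
    return math.prod(failure_probs)


def prob_all_fail_with_common_mode(failure_probs: list[float], p_common: float) -> float:
    """As above, plus a shared failure mode that disables every sensor at once.

    Either the common-mode event occurs, or it does not and every sensor still
    fails independently.
    """
    return p_common + (1.0 - p_common) * prob_all_fail(failure_probs)


if __name__ == "__main__":
    # Hypothetical per-scenario failure probabilities for radar, camera and lidar.
    probs = [0.01, 0.01, 0.01]
    print(f"independent only: {prob_all_fail(probs):.1e}")
    print(f"with 1e-4 common mode: {prob_all_fail_with_common_mode(probs, 1e-4):.2e}")
```

Three sensors that each fail 1% of the time give roughly one joint failure in a million under independence, but a 1-in-10,000 common-mode failure raises the joint figure to about 1.01 × 10⁻⁴.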

Challenges in Sensor Fusion

The first challenge for sensor fusion is simply operating multiple types of sensor simultaneously, which may increase the total size, weight, power consumption and cost beyond acceptable limits. Once the sensors are running, there are further challenges in calibration, synchronisation and handling the volume of data.
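One common way to handle synchronisation is to pair each frame from one sensor with the nearest-in-time frame from another, discarding pairs that are too far apart. The sketch below assumes both sensors' timestamps are already on a common clock and sorted; the function name, frame rates and 20 ms tolerance are hypothetical.

```python
import bisect
from typing import Optional


def match_nearest(
    reference_ts: list[float], other_ts: list[float], tolerance_s: float
) -> list[tuple[int, Optional[int]]]:
    """Pair each reference timestamp with the nearest timestamp from another sensor.

    Both lists must be sorted and on a common clock. Pairs further apart than
    tolerance_s are left unmatched (None) rather than fused with stale data.
    """
    pairs = []
    for i, t in enumerate(reference_ts):
        j = bisect.bisect_left(other_ts, t)
        # The nearest neighbour is either just before or at/after the insertion point.
        candidates = [k for k in (j - 1, j) if 0 <= k < len(other_ts)]
        best = min(candidates, key=lambda k: abs(other_ts[k] - t), default=None)
        if best is not None and abs(other_ts[best] - t) <= tolerance_s:
            pairs.append((i, best))
        else:
            pairs.append((i, None))
    return pairs


if __name__ == "__main__":
    camera = [0.000, 0.033, 0.067, 0.100]  # ~30 fps camera frames (seconds)
    radar = [0.005, 0.055, 0.105]          # ~20 Hz radar frames (seconds)
    print(match_nearest(camera, radar, tolerance_s=0.02))
```

Here the second camera frame is left unmatched because the closest radar frame is 22 ms away, which exceeds the tolerance.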

Once the sensors are installed and operating optimally, the fusion algorithms must be implemented. Designing these algorithms can be challenging, depending on the nature of the application, and can involve choices about how to weight the sensor inputs appropriately when different sensors disagree. Many applications also impose the additional constraint of performing fusion in real time, which can require significant processing hardware if multiple sophisticated sensors are involved.
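When two sensors measure the same quantity, one simple way to weight them is inverse-variance weighting, combined with a statistical check that they actually agree before fusing. The sketch below assumes independent, unbiased scalar measurements with known variances and uses a chi-squared gate (3.841, the 95% point for one degree of freedom); the function name, threshold choice and example values are illustrative, not a prescribed method.

```python
from typing import Optional

CHI2_1DOF_95 = 3.841  # 95% point of the chi-squared distribution, 1 degree of freedom


def fuse_if_consistent(
    a: float, var_a: float, b: float, var_b: float, gate: float = CHI2_1DOF_95
) -> Optional[tuple[float, float]]:
    """Fuse two independent scalar measurements only if they agree statistically.

    The normalised squared difference (a - b)^2 / (var_a + var_b) is compared
    with a chi-squared gate. If the measurements agree, they are combined with
    inverse-variance weights and (value, variance) is returned; otherwise None
    is returned so that a higher-level policy can decide which sensor to trust.
    """
    d2 = (a - b) ** 2 / (var_a + var_b)
    if d2 > gate:
        return None
    w_a, w_b = 1.0 / var_a, 1.0 / var_b
    return (w_a * a + w_b * b) / (w_a + w_b), 1.0 / (w_a + w_b)


if __name__ == "__main__":
    # Hypothetical range readings (m) and variances (m^2) from two sensors.
    print(fuse_if_consistent(25.3, 0.04, 24.8, 0.25))  # consistent: fused estimate
    print(fuse_if_consistent(25.3, 0.04, 18.0, 0.25))  # inconsistent: None
```

In the first call the readings are consistent and the fused variance (about 0.034 m²) is lower than either input; in the second, the 7.3 m discrepancy fails the gate and the decision is deferred.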

Future Outlook

As radar technology advances, it is becoming more common for radars to measure range and velocity as well as both azimuth and elevation angles; this is often called 4D radar. Such radars sometimes have larger antenna arrays with more transmitters and receivers, allowing them to output higher-resolution data. While 4D imaging radars can potentially operate as standalone sensors in many circumstances, it is almost certainly still beneficial to fuse their data with data from another sensor where possible.
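A 4D radar typically reports each detection as range, azimuth, elevation and radial velocity. Converting these to Cartesian coordinates puts radar points in the same kind of frame as lidar point clouds, a common first step before fusion. The data class, field names and axis convention (x forward, y left, z up) below are assumptions for illustration; extrinsic calibration between the sensors would still be needed.

```python
import math
from dataclasses import dataclass


@dataclass
class RadarPoint4D:
    """One detection from a hypothetical 4D radar point cloud."""
    range_m: float
    azimuth_deg: float        # positive to the left (assumed convention)
    elevation_deg: float      # positive upwards
    radial_velocity_mps: float


def to_cartesian(p: RadarPoint4D) -> tuple[float, float, float]:
    """Convert range/azimuth/elevation to x (forward), y (left), z (up) in metres."""
    az, el = math.radians(p.azimuth_deg), math.radians(p.elevation_deg)
    x = p.range_m * math.cos(el) * math.cos(az)
    y = p.range_m * math.cos(el) * math.sin(az)
    z = p.range_m * math.sin(el)
    return x, y, z


if __name__ == "__main__":
    detection = RadarPoint4D(range_m=50.0, azimuth_deg=10.0,
                             elevation_deg=2.0, radial_velocity_mps=-3.0)
    x, y, z = to_cartesian(detection)
    print(f"x={x:.2f} m, y={y:.2f} m, z={z:.2f} m, v={detection.radial_velocity_mps} m/s")
```

The radial velocity is carried through unchanged, so each fused point can retain the motion information that lidar alone does not provide.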

As sensor data becomes larger and ever more complex, developing fusion algorithms becomes even more challenging. One approach to addressing this is AI-enhanced fusion, which uses machine learning techniques to produce optimal outputs for varied applications under a wide range of environmental conditions.
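As a deliberately minimal stand-in for machine-learning fusion, the sketch below learns a single fusion weight from labelled examples by least squares, rather than fixing it by hand. Practical AI-enhanced fusion typically uses far richer models, such as neural networks; the function name and data here are hypothetical.

```python
def learn_fusion_weight(a: list[float], b: list[float], truth: list[float]) -> float:
    """Least-squares weight w minimising sum((w*a + (1 - w)*b - truth)^2).

    Setting the derivative with respect to w to zero gives
        w = sum((a - b) * (truth - b)) / sum((a - b)^2).
    """
    num = sum((ai - bi) * (ti - bi) for ai, bi, ti in zip(a, b, truth))
    den = sum((ai - bi) ** 2 for ai, bi in zip(a, b))
    if den == 0.0:
        raise ValueError("sensor inputs are identical; the weight is undefined")
    return num / den


if __name__ == "__main__":
    # Hypothetical labelled data: two sensors' estimates against ground truth.
    sensor_a = [10.2, 20.1, 29.8, 40.3]
    sensor_b = [9.5, 21.0, 31.0, 38.9]
    truth = [10.0, 20.0, 30.0, 40.0]
    w = learn_fusion_weight(sensor_a, sensor_b, truth)
    print(f"learned weight for sensor A: {w:.2f}")
```

Fitting separate weights for different conditions (for example, clear weather versus heavy rain) is one small step towards the condition-dependent behaviour described above.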

Conclusion

Sensor fusion enhances situational awareness and decision-making in intelligent and autonomous systems by integrating multiple sensors with advanced processing. Fusing radar with camera and/or lidar data addresses radar's limitations, such as low spatial resolution and classification difficulties, helping to ensure optimal performance under varying environmental conditions. By providing accurate situational awareness in adverse environments, sensor fusion proves its value across a range of applications and highlights the effectiveness of AI-driven processing.

Ready to implement advanced sensor fusion? Contact our team of experts to discuss your multi-modal sensing requirements.

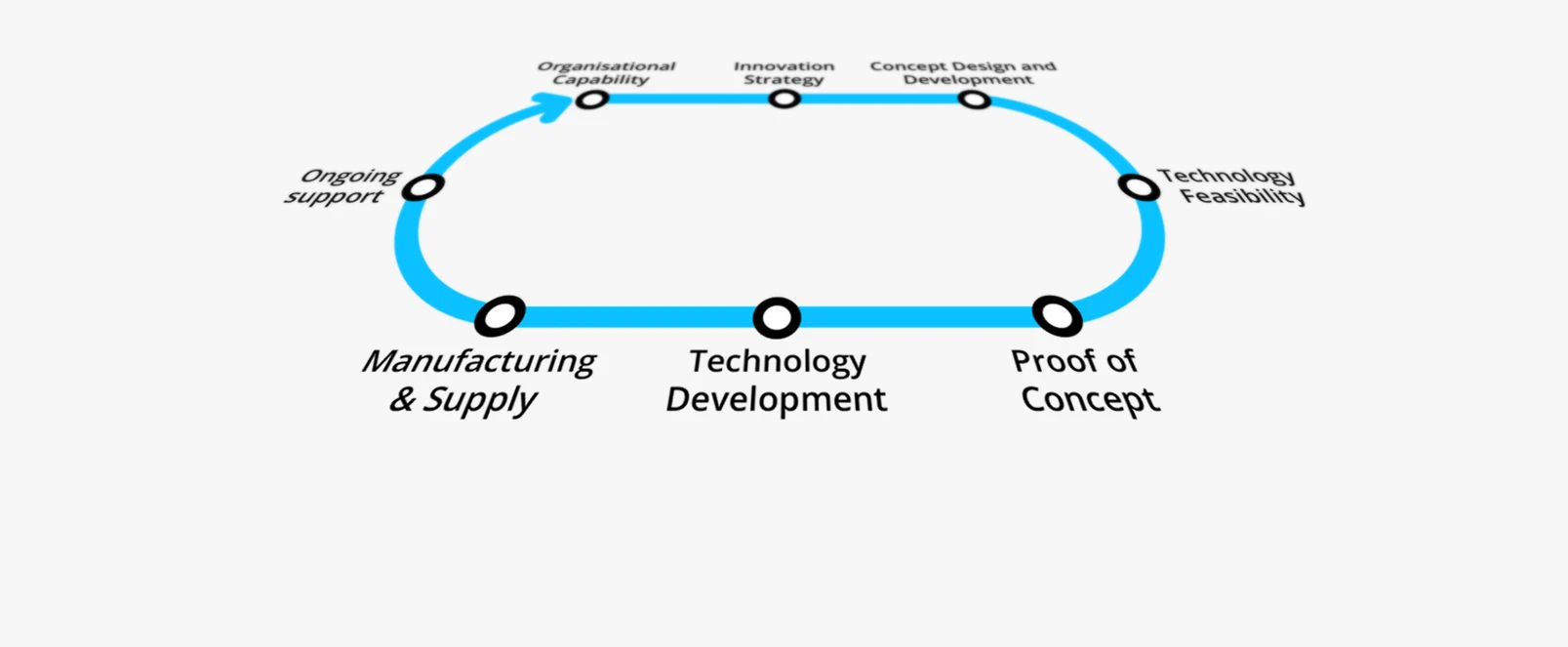

Sensor Fusion

Sensor fusion development combining radar, camera & lidar data for autonomous vehicles, robotics, space, security & more.

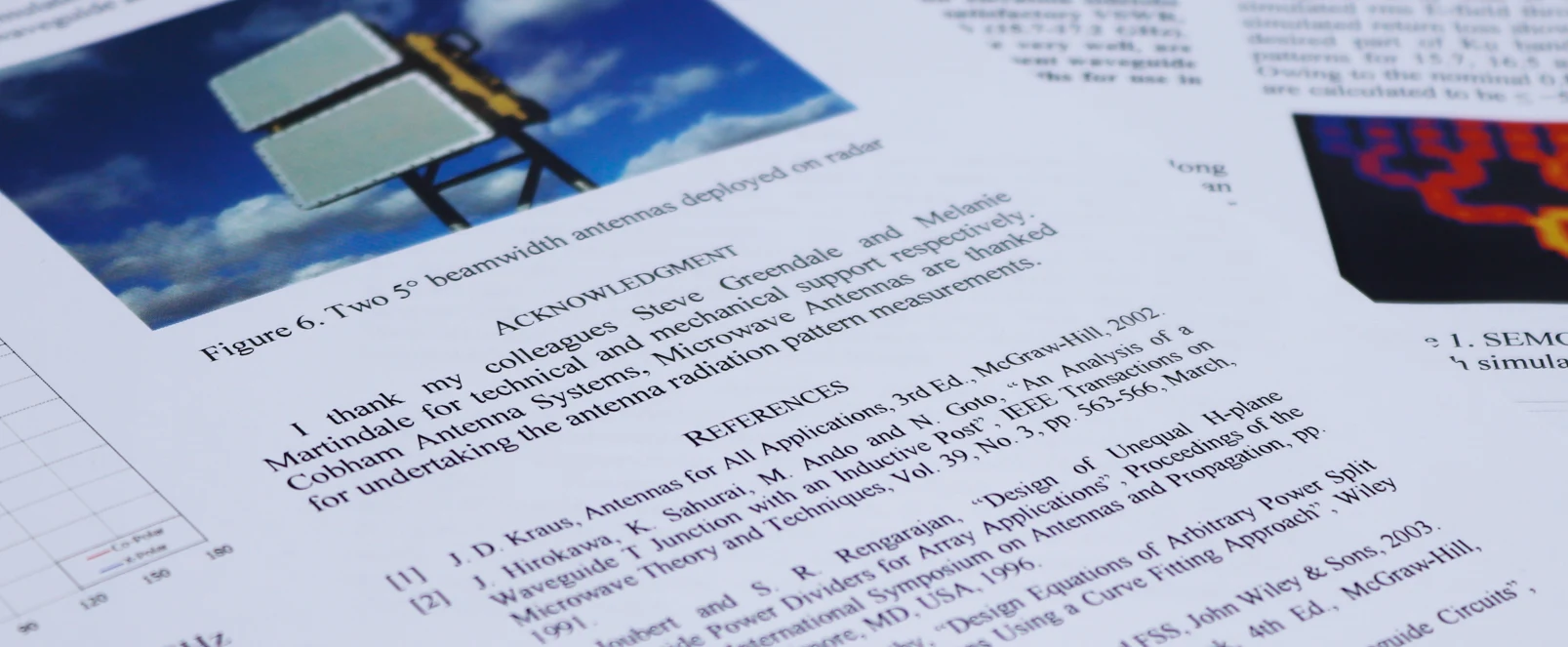

Radar

Plextek’s mmWave technology platform enables rapid development and deployment of custom radar solutions at scale and pace.