The Challenge

AI object detection models for colour imagery are widely available. They have been trained on large quantities of imagery that was painstakingly labelled by hand. Similar performance should be achievable with an AI model trained on thermal imagery, if only such a labelled data set existed. Manually labelling a custom data set is possible but time consuming. For example, producing the well-known Common Objects in Context (COCO) dataset required over 22 worker hours per 1,000 segmented objects.

The challenge: develop a method to create large-scale labelled thermal image datasets without the high cost of manual annotation.

The Approach

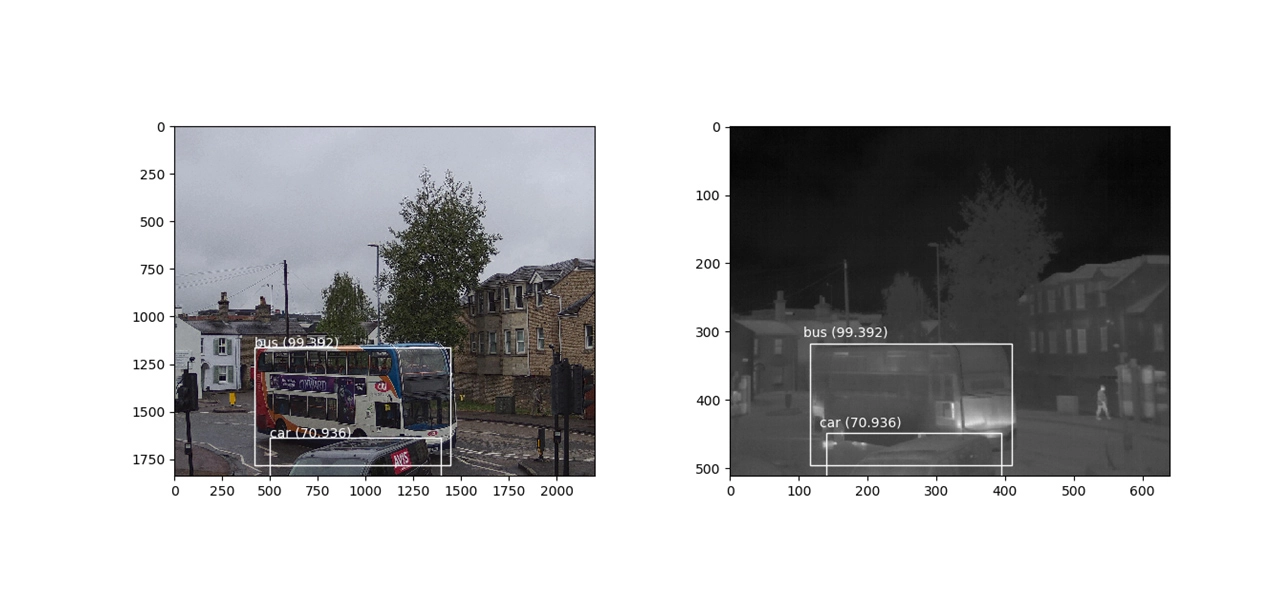

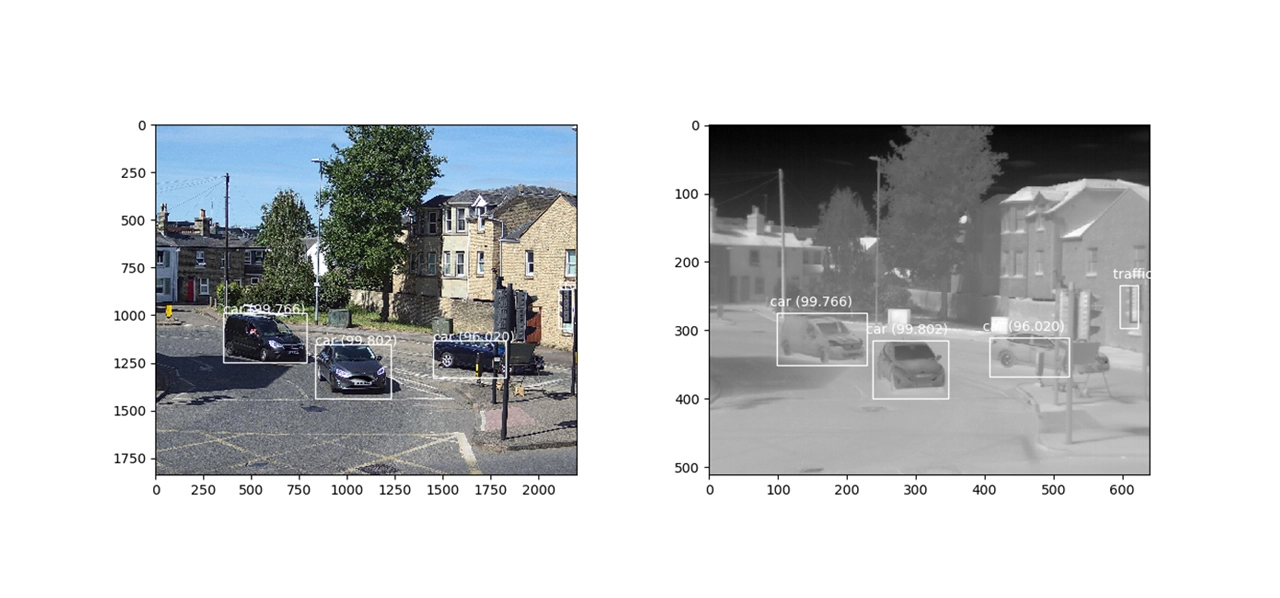

Plextek developed an automated approach. By co-locating a colour camera and a thermal imager with the same field of view, we collected many synchronised image pairs of the same scene during the day. An AI object recognition model then generated labelled bounding boxes for the scene content in the colour images, producing 12,392 labelled boxes across 4,639 images. Because the AI model performs comparably to a human under well-lit conditions, these boxes could be transferred to the thermal images and used as a source of reliable ground truth. The only requirement was that each colour image was registered to its thermal counterpart so that the pixel positions were equivalent.
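As an illustration of the box-transfer step, the sketch below maps a bounding box from colour-image pixels into thermal-image pixels. It assumes the registration can be expressed as a 3x3 homography; the article does not say which registration method was used, and the matrix values, image sizes and function names here are hypothetical.

```python
from typing import Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels
Matrix3 = Sequence[Sequence[float]]      # assumed 3x3 homography, colour -> thermal


def map_point(h: Matrix3, x: float, y: float) -> Tuple[float, float]:
    """Apply a 3x3 homography to a single pixel position."""
    u = h[0][0] * x + h[0][1] * y + h[0][2]
    v = h[1][0] * x + h[1][1] * y + h[1][2]
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    return u / w, v / w


def transfer_box(h: Matrix3, box: Box, width: int, height: int) -> Box:
    """Map a colour-image box into thermal pixel coordinates.

    All four corners are mapped, then the enclosing axis-aligned box
    is clipped to the thermal image bounds (width x height).
    """
    x0, y0, x1, y1 = box
    corners = [map_point(h, x, y)
               for x, y in ((x0, y0), (x1, y0), (x1, y1), (x0, y1))]
    xs = [c[0] for c in corners]
    ys = [c[1] for c in corners]
    return (
        max(0.0, min(xs)),
        max(0.0, min(ys)),
        min(float(width), max(xs)),
        min(float(height), max(ys)),
    )


# Hypothetical registration: the colour image is twice the thermal
# resolution and offset by a few pixels.
H_COLOUR_TO_THERMAL = [[0.5, 0.0, 4.0],
                       [0.0, 0.5, -2.0],
                       [0.0, 0.0, 1.0]]

thermal_box = transfer_box(H_COLOUR_TO_THERMAL, (100, 200, 300, 400), 640, 480)
```

Mapping all four corners rather than two keeps the box valid if the registration includes rotation or perspective. A box that falls wholly outside the thermal frame is not handled here and would need to be discarded separately.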

The resulting labelled thermal images were then used to train an AI model to detect and classify objects within thermal images. While these images could be used to train a model from scratch, a better approach was to use transfer learning to retrain an existing AI model that works on colour images. This had the advantage of leveraging the hundreds of thousands of colour images used to train the AI model to understand edges and textures within a general image. The much smaller number of IR images was then used to teach the AI model how specific patterns of IR edges and textures correspond to particular objects of interest (i.e. the labelled boxes).
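The idea can be shown with a deliberately tiny, dependency-free sketch of one common form of transfer learning: the general-purpose feature layers are frozen and only a small task-specific head is trained on the new data. Everything here is an assumption for illustration. `frozen_backbone` is a hand-written stand-in for pretrained layers, the four-pixel "patches" are made-up data, and a real system would retrain a detection network in a deep learning framework rather than a logistic-regression head.

```python
import math
from typing import List, Sequence, Tuple

Sample = Tuple[Sequence[float], int]  # (thermal patch intensities, label)


def frozen_backbone(patch: Sequence[float]) -> List[float]:
    """Hypothetical stand-in for pretrained layers: fixed, never updated."""
    mean = sum(patch) / len(patch)
    contrast = max(patch) - min(patch)
    return [mean, contrast]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train_head(samples: Sequence[Sample], steps: int = 2000,
               lr: float = 1.0) -> Tuple[List[float], float]:
    """Train only the new head by gradient descent on the logistic loss."""
    # Features are computed once because the backbone does not change.
    features = [frozen_backbone(patch) for patch, _ in samples]
    labels = [label for _, label in samples]
    weights = [0.0] * len(features[0])
    bias = 0.0
    n = len(samples)
    for _ in range(steps):
        grad_w = [0.0] * len(weights)
        grad_b = 0.0
        for x, y in zip(features, labels):
            err = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias) - y
            for i, xi in enumerate(x):
                grad_w[i] += err * xi / n
            grad_b += err / n
        weights = [w - lr * g for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b
    return weights, bias


def predict(head: Tuple[List[float], float], patch: Sequence[float]) -> int:
    """Return 1 for 'object of interest', 0 for background."""
    weights, bias = head
    x = frozen_backbone(patch)
    return int(sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias) >= 0.5)


# Made-up thermal patches: warm objects show high contrast, background is flat.
THERMAL_SAMPLES: List[Sample] = [
    ([0.2, 0.9, 0.8, 0.3], 1),
    ([0.1, 0.8, 0.9, 0.2], 1),
    ([0.30, 0.35, 0.30, 0.32], 0),
    ([0.50, 0.52, 0.48, 0.50], 0),
]

head = train_head(THERMAL_SAMPLES)
```

Because only the head's few parameters are learned, a small labelled set is enough, which mirrors why the much smaller IR data set could build on features learned from colour imagery.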

The Outcome

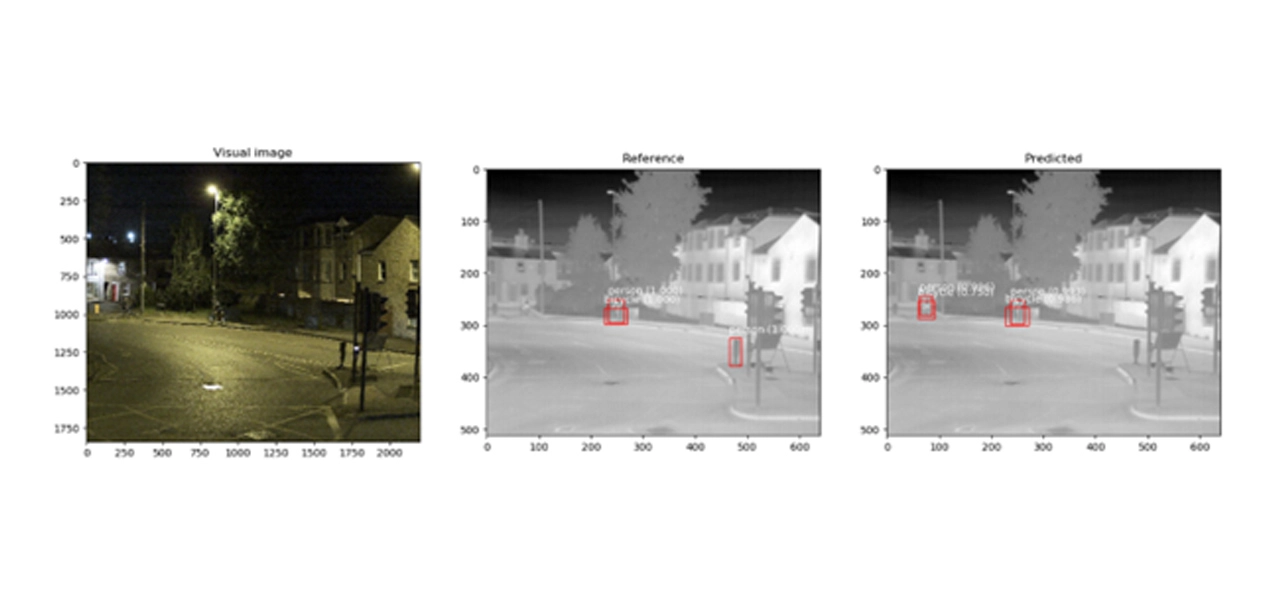

After the AI model was retrained on thermal images, its performance was assessed on imagery collected at night, none of which was in the training data set. The presence of streetlights meant that the colour camera could still be used to detect objects within the lit parts of the image. However, there were some false negatives when objects moved within less well-lit regions. There were also misclassifications, such as a bollard being classified as a person.

Middle: A thermal image with bounding boxes produced from the colour image.

Right: A thermal image with bounding boxes produced directly from the thermal image itself using the retrained AI model.

In contrast, the retrained AI model successfully detected people in thermal images with significantly fewer false alarms. There were also fewer misclassifications, as bollards do not look like people in thermal imagery.
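The article does not state how detections were scored, so the following is only a sketch of one conventional way to count false alarms and false negatives: each detection is matched to a reference box when their intersection over union (IoU) reaches a threshold. The reference boxes, the 0.5 threshold and the function names are assumptions for illustration.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix0, iy0 = max(a[0], b[0]), max(a[1], b[1])
    ix1, iy1 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix1 - ix0) * max(0.0, iy1 - iy0)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def count_errors(reference: Sequence[Box], detections: Sequence[Box],
                 threshold: float = 0.5) -> Tuple[int, int, int]:
    """Return (true positives, false alarms, false negatives).

    Each detection is greedily matched to the best-overlapping
    reference box that has not already been matched.
    """
    unmatched: List[Box] = list(reference)
    true_pos = 0
    false_alarms = 0
    for det in detections:
        best = max(unmatched, key=lambda ref: iou(det, ref), default=None)
        if best is not None and iou(det, best) >= threshold:
            unmatched.remove(best)
            true_pos += 1
        else:
            false_alarms += 1
    return true_pos, false_alarms, len(unmatched)


# Hypothetical night-time frame: two people present, one detected,
# plus one spurious detection elsewhere in the frame.
reference_boxes = [(10, 10, 50, 90), (100, 20, 140, 100)]
detected_boxes = [(12, 12, 50, 88), (200, 30, 220, 80)]
result = count_errors(reference_boxes, detected_boxes)
```

Running the same count on the colour-derived boxes and on the retrained thermal model's boxes would give a like-for-like comparison of the two, provided both are scored against the same reference labels.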

This improved performance is not unexpected, as a thermal camera should work better than a colour camera at night, even with streetlights. However, the key benefit was that the ground truth data used to train the AI model on thermal images was generated automatically, without significant manual effort. This addresses an important bottleneck in developing automated AI systems, one that could otherwise prevent an AI model from being developed at all.

Partner with Plextek for AI and Sensor Integration

Plextek combines deep expertise in multi-sensor systems integration with AI and transfer learning. Our end-to-end approach, from constructing combined sensor systems to training AI models for sensor-related tasks and beyond, enables fast, accurate development of your AI systems. If you’re facing similar challenges in developing AI models with limited training data, we’d welcome the opportunity to discuss a solution.

Get in touch to discuss your requirements