We build the infrastructure to manage your data, use machine learning to interpret it, and create the user interfaces to visualise and present it.

Data Exploitation

Our expertise lies in applying technology that meets your data requirements and grows with them, from custom sensor applications to massive IoT cloud deployments.

Key Skills

- Machine Learning, Neural Networks, Artificial intelligence, Deep belief networks, Graph Based Methods, Clustering Algorithms, Supervised Classification, Unsupervised Classification, Deep learning, Dempster-Shafer Theory, Transferable Belief Model, Classification, Pattern recognition, Bayesian classification, Data fusion, Clustering

- Databases, Big Data, RDBMS, SQL Server, MySQL, MongoDB, Apache Hadoop, GIS, GEOINT

- Data visualisation, Image processing, Stereo Vision, Communications, ESM, ECM

- Cloud, Microsoft Azure, Amazon Web Services (AWS), Web Development, Application Development

- Multivariate analysis, Doppler analysis, Global Optimisation, Statistics, Spectroscopy, Radar, Physical modelling, Sensor Analysis, Target tracking, Anomaly Detection, Particle filtering, Kalman filtering

- Sensor Output Fusion, Knowledge Extraction, Enhanced situational awareness, Fleet management, IoT, Business intelligence, Industry 4.0

- ASP.NET MVC, WPF, .NET, C#, JavaScript, Ajax, jQuery, REST, SOAP, WCF, IDL, MATLAB, Python, Keras, TensorFlow

Matthew Roberts

Senior Consultant, Data Exploitation

“There’s a wealth of data available from a large variety of sources and making sense of it all is critical to success. I find it fascinating that computers can sift through enormous quantities of data to spot patterns that humans can’t and yet a child can outperform a computer in some relatively simple problems. Artificial intelligence is progressing at a rapid pace, constantly pushing the boundary between the possible and the impossible.”

Project

Benefits Of Using Data in IoT

IoT is all about data, and there are only a few things we need to do with our data: (1) we need to get data from our IoT sensor module and into our database, (2) we need to make decisions based on our data, (3) we need to visualise our data, and (4) sometimes we might need to get new data back to our sensor. In concept, it doesn’t sound that difficult, but in reality, the total man-years of software development involved are extraordinary. Fortunately, over the last decade the building blocks have all become commoditised.
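The four operations listed above can be sketched in a few lines. This is a minimal illustration only, not a description of any deployed system: it uses an in-memory SQLite database in place of a cloud data service, and the table layout, the `ALERT_THRESHOLD_W` value, and the downlink message fields are all assumptions made for the example.

```python
import sqlite3

ALERT_THRESHOLD_W = 3000.0  # hypothetical alert level, in watts


def open_store():
    """Create an in-memory database standing in for the real data service."""
    db = sqlite3.connect(":memory:")
    db.execute("CREATE TABLE readings (sensor_id TEXT, ts INTEGER, power_w REAL)")
    return db


def ingest(db, sensor_id, ts, power_w):
    """(1) Get data from the sensor module into the database."""
    db.execute("INSERT INTO readings VALUES (?, ?, ?)", (sensor_id, ts, power_w))


def decide(db, sensor_id):
    """(2) Make a decision: is the latest reading above the alert level?"""
    row = db.execute(
        "SELECT power_w FROM readings WHERE sensor_id = ? ORDER BY ts DESC LIMIT 1",
        (sensor_id,),
    ).fetchone()
    return row is not None and row[0] > ALERT_THRESHOLD_W


def summarise(db, sensor_id):
    """(3) Produce the figures a dashboard chart would display."""
    lowest, highest, mean = db.execute(
        "SELECT MIN(power_w), MAX(power_w), AVG(power_w) "
        "FROM readings WHERE sensor_id = ?",
        (sensor_id,),
    ).fetchone()
    return {"min_w": lowest, "max_w": highest, "mean_w": mean}


def downlink(sensor_id, alert):
    """(4) Build new data to send back to the sensor (assumed message shape)."""
    # Sample more often while an alert is active; both intervals are illustrative.
    return {"sensor_id": sensor_id, "sample_interval_s": 10 if alert else 60}
```

Each function is trivial on its own, which is the point: the effort in a real deployment goes into the transport, security, and scaling around these four steps.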

If we take LoRa Technology as an example, here we have a chirp spread spectrum radio and software stack enabling long-range data transport, ideal for remote monitoring applications. A decade ago this would have had to be developed in-house, but today it’s all just off-the-shelf equipment. Likewise, an IoT deployment needs reliable and scalable data services, and in the past we developed systems to be just that. Today, just as with LoRa, that has changed: we build a new system from commodity cloud data capability, everything from IoT message queues, to off-the-shelf machine learning, to database capacity that scales at the push of a button.

At first glance, it would seem that commoditisation has made everything so much easier, because building a system is just a case of putting the Lego bricks together. Of course, they are rather complicated Lego bricks that very rarely fit together! A good example of complexity is a LoRa system we built for measuring power consumption. Here our data starts as an analogue signal, moves through analogue signal conditioning, to A/D, to STM embedded, to the LoRa stack, to the LoRa gateway, to the LoRa Server running Node-RED code, to the WebSocket, to the IoT Hub, to the database, to the MVC web framework, through to AJAX, and finally to HTML, CSS, and JavaScript. Each step is simple on its own; the challenge lies in putting the whole path together quickly and knowing how to make it secure, reliable, and scalable.

Luckily, that’s exactly the challenge that our Data, Sensor, and IoT teams are here to meet.
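To give a flavour of one hop in the power-measurement path described above, the sketch below packs a raw A/D reading into a compact binary uplink, as an embedded device might before handing it to a LoRa stack, and decodes it on the server side into a JSON message ready for a message queue. The 8-byte field layout, the 12-bit converter, and the full-scale power figure are assumptions chosen for illustration; they are not taken from the system described here.

```python
import json
import struct

# Hypothetical 8-byte uplink layout, big-endian:
# sensor id (uint16), Unix timestamp (uint32), raw ADC count (uint16).
PAYLOAD_FORMAT = ">HIH"
ADC_FULL_SCALE = 4095       # assumes a 12-bit converter
FULL_SCALE_WATTS = 5000.0   # assumed power at a full-scale ADC reading


def encode_uplink(sensor_id, timestamp, adc_count):
    """Device side: pack one reading into a small binary payload."""
    return struct.pack(PAYLOAD_FORMAT, sensor_id, timestamp, adc_count)


def decode_uplink(payload):
    """Server side: unpack the payload and scale the ADC count to watts."""
    sensor_id, timestamp, adc_count = struct.unpack(PAYLOAD_FORMAT, payload)
    power_w = adc_count / ADC_FULL_SCALE * FULL_SCALE_WATTS
    return {"sensor_id": sensor_id, "ts": timestamp, "power_w": round(power_w, 1)}


def to_hub_message(reading):
    """Serialise the decoded reading as JSON for the next stage in the path."""
    return json.dumps(reading, sort_keys=True)
```

A call such as `to_hub_message(decode_uplink(encode_uplink(7, 1700000000, 2048)))` round-trips one reading through both ends. Keeping the radio payload binary and small, and only expanding to JSON once the data reaches the server, is a common choice on low-bandwidth links.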

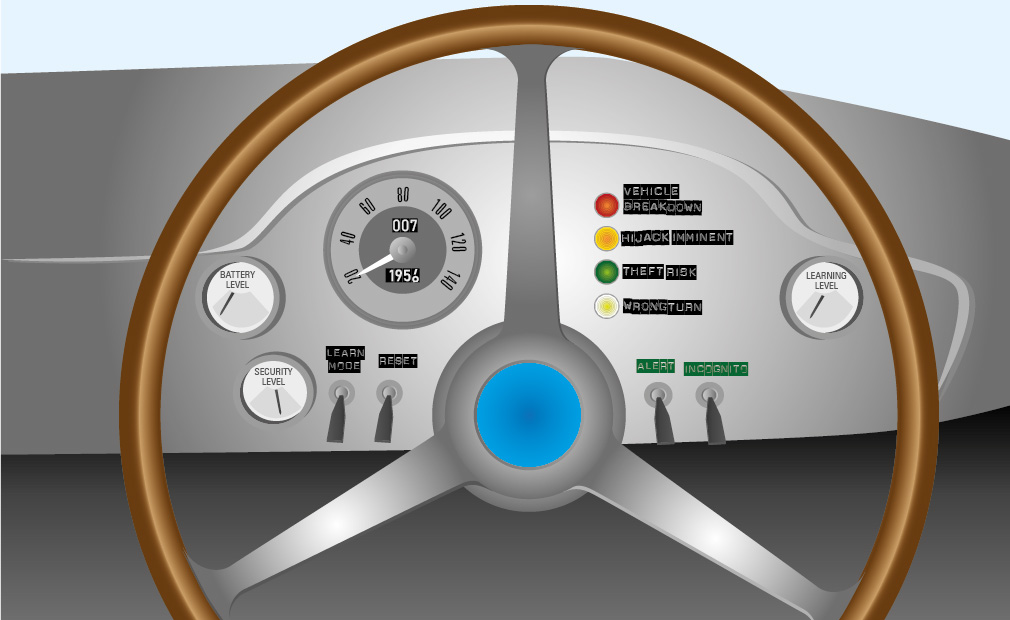

Using Patterns of Life For Driver Identification

We have been developing our capability in machine learning methods, and in this project we were engaged to look at the problem of detecting anomalous driver behaviour in a security context where only a limited time frame of vehicle tracking data was available. Having looked at the various techniques that could be applied, including Deep Belief Networks (DBN), it was apparent that these complex multi-level networks could not learn each driver’s pattern of life from so little data. We eventually settled on using one Restricted Boltzmann Machine (RBM) per driver, as RBMs could be trained quickly and generalised well.
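As a rough illustration of the one-RBM-per-driver idea, the sketch below trains a very small Bernoulli RBM for each driver using one-step contrastive divergence and scores a trip by its reconstruction error. It is a toy under stated assumptions, not the model used in the project: the binary trip encoding (one flag per route segment visited), the network size, the learning rate, and the use of reconstruction error as the anomaly score are all choices made for this example.

```python
import math
import random


def sigmoid(x):
    # Clamp the input so math.exp cannot overflow.
    x = max(-60.0, min(60.0, x))
    return 1.0 / (1.0 + math.exp(-x))


class TinyRBM:
    """Bernoulli RBM trained with one-step contrastive divergence (CD-1)."""

    def __init__(self, n_visible, n_hidden, rng):
        self.rng = rng
        self.w = [[rng.gauss(0.0, 0.1) for _ in range(n_hidden)]
                  for _ in range(n_visible)]
        self.b_vis = [0.0] * n_visible
        self.b_hid = [0.0] * n_hidden

    def hidden_probs(self, v):
        return [sigmoid(self.b_hid[j] + sum(v[i] * self.w[i][j] for i in range(len(v))))
                for j in range(len(self.b_hid))]

    def visible_probs(self, h):
        return [sigmoid(self.b_vis[i] + sum(h[j] * self.w[i][j] for j in range(len(h))))
                for i in range(len(self.b_vis))]

    def train(self, data, epochs=200, lr=0.1):
        for _ in range(epochs):
            for v0 in data:
                h0 = self.hidden_probs(v0)
                # Sample binary hidden states to drive the reconstruction.
                h0_sample = [1.0 if self.rng.random() < p else 0.0 for p in h0]
                v1 = self.visible_probs(h0_sample)
                h1 = self.hidden_probs(v1)
                # Move towards the data statistics, away from the model's own.
                for i in range(len(v0)):
                    for j in range(len(h0)):
                        self.w[i][j] += lr * (v0[i] * h0[j] - v1[i] * h1[j])
                    self.b_vis[i] += lr * (v0[i] - v1[i])
                for j in range(len(h0)):
                    self.b_hid[j] += lr * (h0[j] - h1[j])

    def reconstruction_error(self, v):
        """Squared error between a trip and its mean-field reconstruction."""
        v_rec = self.visible_probs(self.hidden_probs(v))
        return sum((a - b) ** 2 for a, b in zip(v, v_rec))


def train_driver_models(trips_by_driver, n_hidden=3, seed=0):
    """Train one small RBM per driver on that driver's own trips only."""
    models = {}
    for driver, trips in trips_by_driver.items():
        rbm = TinyRBM(len(trips[0]), n_hidden, random.Random(seed))
        rbm.train(trips)
        models[driver] = rbm
    return models
```

With a driver whose usual trips look like `[1, 1, 1, 0, 0, 0]` and `[1, 1, 0, 0, 0, 0]`, a trip such as `[1, 0, 0, 0, 1, 1]` should reconstruct poorly and so score a noticeably higher error than the familiar ones. A model this small trains in well under a second per driver, which is the practical attraction when only a short window of tracking data is available.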

The trained RBMs were then used to detect anomalous events within the routes and stops made by drivers. The anomalies detected could then inform the human operator’s decision as to whether the event was a safety or security issue. For example, an anomalous stop may indicate a vehicle breakdown or hijacking; two vehicles stopping together in an unusual place may indicate a swapping of goods; and an anomalous turn may be a driver mistake that takes them into an insecure area. With more data available, the system could be extended to learn more subtle features within the driver’s pattern of life, and so provide alerts for more types of anomalies.
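One of the examples above, two vehicles stopping together, can be checked with simple geometry once stops have been extracted from the tracking data. The sketch below is an assumed, rule-based illustration rather than the learned approach used in the project: it flags pairs of different vehicles whose stops overlap in time and lie within a distance limit. The stop record fields and the 50-metre default are hypothetical, and deciding whether the place is unusual would still fall to the pattern-of-life model.

```python
import math
from itertools import combinations

EARTH_RADIUS_M = 6371000.0


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two latitude/longitude points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def colocated_stops(stops, max_distance_m=50.0):
    """Return pairs of vehicles that were stopped close together at the same time.

    Each stop is a dict with keys: vehicle, lat, lon, start, end
    (start and end in seconds).
    """
    pairs = []
    for a, b in combinations(stops, 2):
        if a["vehicle"] == b["vehicle"]:
            continue
        # Positive overlap means both vehicles were stationary simultaneously.
        overlap = min(a["end"], b["end"]) - max(a["start"], b["start"])
        if overlap <= 0:
            continue
        if haversine_m(a["lat"], a["lon"], b["lat"], b["lon"]) <= max_distance_m:
            pairs.append((a["vehicle"], b["vehicle"]))
    return pairs
```

Pairs returned by `colocated_stops` would be candidates for an operator alert, not conclusions in themselves.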

This work showed that machine learning can fulfil an important support role to human operators within a relatively short time from deployment by alerting them to unusual events of significance. The operator then makes the decisions about the potential consequences of the event and any actions that should be taken. Human and machine thus work co-operatively, with each carrying out the tasks for which they are best equipped.
